Ion bombardment of metal surfaces is an important, but poorly understood, nanomanufacturing technique. New research using sophisticated supercomputer simulations has shown what goes on in trillionths of a second. The advance could lead to better ways to predict the phenomenon and more uses of the technique to make new nanoscale products.

PROVIDENCE, R.I. [Brown University] — To modify a metal surface at the scale of atoms and molecules — for instance to refine the wiring in computer chips or the reflective silver in optical components — manufacturers shower it with ions. While the process may seem high-tech and precise, the technique has been limited by the lack of understanding of the underlying physics. In a new study, Brown University engineers modeled noble gas ion bombardments with unprecedented richness, providing long-sought insights into how it works.

The improved understanding could open the door to new technologies, Kyung-Suk Kim said, such as new approaches to making flexible electronics, biocompatible surfaces for medical devices, and more damage-tolerant and radiation-resistant surfaces. The research applies to so-called “FCC” (face-centered cubic) metals such as copper, silver, gold, nickel, and aluminum. Those metals are crystals made up of cubic arrangements of atoms, with one atom at each corner of the cube and one at the center of each face.
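The FCC arrangement described above can be made concrete with a short sketch. The Python below builds atom positions for a small block of FCC cells and checks the spacing between nearest neighbors. The function names and the lattice constant (about 0.3615 nm, roughly that of copper) are assumptions chosen for illustration and are not taken from the study.

```python
from itertools import product

# Fractional coordinates of the four-atom basis of the conventional FCC cell:
# one corner atom plus three face-center atoms. The other corners and faces
# of the cube are supplied by neighboring cells when the cell is repeated.
FCC_BASIS = [(0.0, 0.0, 0.0), (0.5, 0.5, 0.0), (0.5, 0.0, 0.5), (0.0, 0.5, 0.5)]


def fcc_positions(n_cells, lattice_constant):
    """Return atom coordinates for an n_cells x n_cells x n_cells FCC block."""
    positions = []
    for i, j, k in product(range(n_cells), repeat=3):
        for bx, by, bz in FCC_BASIS:
            positions.append((
                (i + bx) * lattice_constant,
                (j + by) * lattice_constant,
                (k + bz) * lattice_constant,
            ))
    return positions


def nearest_neighbor_distance(positions):
    """Smallest distance between any two atoms (brute force, small blocks only)."""
    best = float("inf")
    for a in range(len(positions)):
        for b in range(a + 1, len(positions)):
            d = sum((p - q) ** 2 for p, q in zip(positions[a], positions[b])) ** 0.5
            best = min(best, d)
    return best


A_COPPER_NM = 0.3615  # approximate lattice constant of copper (assumed for illustration)
atoms = fcc_positions(2, A_COPPER_NM)
nn = nearest_neighbor_distance(atoms)
print(len(atoms))  # 4 atoms per cell * 8 cells = 32
print(nn)          # equals the lattice constant divided by sqrt(2)
```

In this geometry each atom's nearest neighbors sit at a distance of the lattice constant divided by the square root of two, which is the spacing the brute-force check recovers.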

Scientists have been trying to explain the complicated process for decades, and more recently they have begun to model it on computers. Kyung-Suk Kim said the analysis by the Brown team, which included lead author and postdoctoral scholar Sang-Pil Kim, was more sophisticated than previous attempts, which focused on a single bombardment event and only on isolated point defects within the metal substrate.

“In this work, for the first time, we investigate collective behavior of those defects during ion bombardments in terms of ion-substrate combinations,” Kyung-Suk Kim said.

The new model revealed how ion bombardments can set three main mechanisms into motion in a matter of trillionths of a second. The researchers dubbed the mechanisms “dual layer formation,” “subway-glide mode growth,” and “adatom island eruption.” They are a consequence of how the incoming ions melt the metal and then how it resolidifies with the ions occasionally trapped inside.

When ions hit the metal surface, they penetrate it, knocking away nearby atoms like billiard balls in a process that is akin, at the atomic level, to melting. But rather than merely rolling away, the atoms are more like magnetic billiard balls in that they come back together, or resolidify, albeit in a different order.

Some atoms have been shifted out of place. There are some vacancies in the crystal nearer to the surface, and the atoms there pull together across the empty space, which creates a layer under tension. Beneath that is a layer into which extra atoms have been knocked. That crowding of atoms creates compression. Hence there are now two layers, one in tension and one in compression. This “dual layer formation” is the precursor to the “subway-glide mode growth” and “adatom island eruption.”

A hallmark of materials that have been bombarded with ions is that they sometimes produce a pattern of material that seems to have popped up out of the original surface. Previously, Kyung-Suk Kim said, scientists thought displaced atoms would individually just bob back up to the surface like fish killed in an underwater explosion. But what the team’s models show is that these molecular islands are formed by whole clusters of displaced atoms that bond together and appear to glide back up to the surface.

“The process is analogous to people getting on a subway train at suburban stations, and they all come out together to the surface once the train arrives at a downtown station during the morning rush hour,” Kyung-Suk Kim said.

The mechanisms, while offering a new explanation for the effects of ion bombardment, are just the beginning of this research.

“As a next step, I will develop prediction models for nanopattern evolution during ion bombardment which can guide the nanomanufacturing processes,” Sang-Pil Kim said. “This research will also be expanded to other applications such as soft- or hard-materials under extreme conditions.”

In addition to Kyung-Suk Kim and Sang-Pil Kim, other authors include Huck Beng Chew, Eric Chason and Vivek Shenoy.

The research was funded by the Korea Institute of Science and Technology, the U.S. National Science Foundation, and the U.S. Department of Energy. The work used the Extreme Science and Engineering Discovery Environment (XSEDE), which is supported by National Science Foundation grant number OCI-1053575.

People with paralysis control robotic arms using brain-computer interface

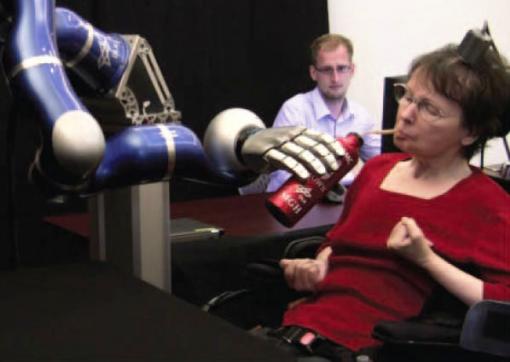

A new study in Nature reports that two people with tetraplegia were able to reach for and grasp objects in three-dimensional space using robotic arms that they controlled directly with brain activity. They used the BrainGate neural interface system, an investigational device currently being studied under an Investigational Device Exemption. One participant used the system to serve herself coffee for the first time since becoming paralyzed nearly 15 years ago.

PROVIDENCE, R.I. [Brown University] — On April 12, 2011, nearly 15 years after she became paralyzed and unable to speak, a woman controlled a robotic arm by thinking about moving her arm and hand to lift a bottle of coffee to her mouth and take a drink. That achievement is one of the advances in brain-computer interfaces, restorative neurotechnology, and assistive robot technology described in the May 17 edition of the journal Nature by the BrainGate2 collaboration of researchers at the Department of Veterans Affairs, Brown University, Massachusetts General Hospital, Harvard Medical School, and the German Aerospace Center (DLR).

A 58-year-old woman (“S3”) and a 66-year-old man (“T2”) participated in the study. They had each been paralyzed by a brainstem stroke years earlier, which left them with no functional control of their limbs. In the research, the participants used neural activity to directly control two different robotic arms, one developed by the DLR Institute of Robotics and Mechatronics and the other by DEKA Research and Development Corp., to perform reaching and grasping tasks across a broad three-dimensional space. The BrainGate2 pilot clinical trial employs the investigational BrainGate system initially developed at Brown University, in which a device the size of a baby aspirin, with a grid of 96 tiny electrodes, is implanted in the motor cortex, a part of the brain that is involved in voluntary movement. The electrodes are close enough to individual neurons to record the neural activity associated with intended movement. An external computer translates the pattern of impulses across a population of neurons into commands to operate assistive devices, such as the DLR and DEKA robot arms used in the study now reported in Nature.
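To give a rough sense of what translating a pattern of impulses into commands can mean, here is a minimal sketch of a linear read-out from 96 channels to a three-dimensional velocity command. It is not the BrainGate decoding algorithm, which this release does not describe. The weights, the resting firing rate, the gain, and every function name are hypothetical and chosen only for illustration.

```python
import math

N_CHANNELS = 96  # number of electrodes stated in the text


def make_tuning_weights(n_channels):
    """Hypothetical weights: give each channel a made-up preferred 3-D direction.

    A real decoder would be fitted to calibration recordings instead.
    """
    weights = [[0.0] * n_channels for _ in range(3)]
    for ch in range(n_channels):
        # Spread the made-up preferred directions roughly evenly over a sphere.
        z = 1.0 - 2.0 * (ch + 0.5) / n_channels
        radius = math.sqrt(1.0 - z * z)
        angle = ch * math.pi * (3.0 - math.sqrt(5.0))  # golden-angle spiral
        weights[0][ch] = radius * math.cos(angle)
        weights[1][ch] = radius * math.sin(angle)
        weights[2][ch] = z
    return weights


def decode_velocity(weights, rates, baseline, gain=0.01):
    """Linear read-out: velocity = gain * W (rates - baseline)."""
    centered = [r - b for r, b in zip(rates, baseline)]
    return [gain * sum(w * c for w, c in zip(row, centered)) for row in weights]


weights = make_tuning_weights(N_CHANNELS)
baseline = [10.0] * N_CHANNELS  # assumed resting firing rate in spikes per second
rest = decode_velocity(weights, baseline, baseline)

# Raise the rate of channels whose made-up preferred direction points along +x.
active = [b + 20.0 * max(0.0, weights[0][ch]) for ch, b in enumerate(baseline)]
command = decode_velocity(weights, active, baseline)
print(rest)     # resting activity decodes to no movement
print(command)  # the x component is positive
```

The design choice here is the simplest possible one: activity above or below a resting level is weighted and summed per axis, so resting activity yields no motion and extra firing on channels "tuned" to one direction pushes the command that way.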

BrainGate participants have previously demonstrated neurally based two-dimensional point-and-click control of a cursor on a computer screen and rudimentary control of simple robotic devices.

The study represents the first demonstration and the first peer-reviewed report of people with tetraplegia using brain signals to control a robotic arm in three-dimensional space to complete a task usually performed by their arm. Specifically, S3 and T2 controlled the arms to reach for and grasp foam targets that were placed in front of them using flexible supports. In addition, S3 used the DLR robot to pick up a bottle of coffee, bring it to her mouth, issue a command to tip it, drink through a straw, and return the bottle to the table. Her BrainGate-enabled, robotic-arm control during the drinking task required a combination of two-dimensional movements across a table top plus a “grasp” command to either grasp and lift or tilt the robotic hand.

“Our goal in this research is to develop technology that will restore independence and mobility for people with paralysis or limb loss,” said lead author Dr. Leigh Hochberg, a neuroengineer and critical care neurologist who holds appointments at the Department of Veterans Affairs, Brown University, Massachusetts General Hospital, and Harvard. He is the sponsor-investigator for the BrainGate2 pilot clinical trial. “We have much more work to do, but the encouraging progress of this research is demonstrated not only in the reach-and-grasp data, but even more so in S3’s smile when she served herself coffee of her own volition for the first time in almost 15 years.”

Partial funding for this work comes from the VA, which is committed to improving the lives of injured veterans. “VA is honored to have played a role in this exciting and promising area of research,” said VA Secretary Eric Shinseki. “Today’s announcement represents a great step forward toward improving the quality of life for veterans and others who have either lost limbs or are paralyzed.”

Hochberg adds that even after nearly 15 years, a part of the brain essentially “disconnected” from its original target by a brainstem stroke was still able to direct the complex, multidimensional movement of an external arm — in this case, a robotic limb. The researchers also noted that S3 was able to perform the tasks more than five years after the investigational BrainGate electrode array was implanted. This sets a new benchmark for how long implanted brain-computer interface electrodes have remained viable and provided useful command signals.

John Donoghue, the VA and Brown neuroscientist who pioneered BrainGate more than a decade ago and who is co-senior author of the study, said the paper shows how far the field of brain-computer interfaces has come since the first demonstrations of computer control with BrainGate.

“This paper reports an important advance by rigorously demonstrating in more than one participant that precise three-dimensional neural control of robot arms is not only possible, but also repeatable,” said Donoghue, who directs the Brown Institute for Brain Science. “We’ve moved significantly closer to returning everyday functions, like serving yourself a sip of coffee, usually performed effortlessly by the arm and hand, for people who are unable to move their own limbs. We are also encouraged to see useful control more than five years after implant of the BrainGate array in one of our participants. This work is a critical step toward realizing the long-term goal of creating a neurotechnology that will restore movement, control, and independence to people with paralysis or limb loss.”

In the research, the robots acted as a substitute for each participant’s paralyzed arm. The robotic arms responded to the participants’ intent to move as they imagined reaching for each foam target. The robot hand grasped the target when the participants imagined a hand squeeze. Because the diameter of the targets was more than half the width of the robot hand openings, the task required the participants to exert precise control. (Videos of these actions are available on the Nature website.)

In 158 trials over four days, S3 was able to touch the target within an allotted time in 48.8 percent of the cases using the DLR robotic arm and hand and 69.2 percent of the cases with the DEKA arm and hand, which has the wider grasp. In 45 trials using the DEKA arm, T2 touched the target 95.6 percent of the time. Of the successful touches, S3 grasped the target 43.6 percent of the time with the DLR arm and 66.7 percent of the time with the DEKA arm. T2’s grasp succeeded 62.2 percent of the time.
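The reported percentages can be converted back into approximate trial counts. The sketch below does this for T2 only, because the text gives T2's trial total (45) but not how S3's 158 trials were divided between the two arms. It assumes that T2's 62.2 percent grasp rate is measured against all 45 trials, which the text does not state explicitly. The helper name and the rounding to whole trials are also assumptions.

```python
def implied_count(total_trials, percent):
    """Whole-number count implied by a reported percentage of total_trials."""
    return round(total_trials * percent / 100.0)


T2_TRIALS = 45       # from the text
T2_TOUCH_PCT = 95.6  # from the text
T2_GRASP_PCT = 62.2  # from the text; denominator assumed to be all 45 trials

touches = implied_count(T2_TRIALS, T2_TOUCH_PCT)
grasps = implied_count(T2_TRIALS, T2_GRASP_PCT)
print(touches, grasps)  # 43 28

# Check that the whole-number counts reproduce the reported percentages.
touch_pct = round(100.0 * touches / T2_TRIALS, 1)
grasp_pct = round(100.0 * grasps / T2_TRIALS, 1)
print(touch_pct, grasp_pct)  # 95.6 62.2
```

Under that assumption, the figures correspond to about 43 touches and 28 grasps in 45 trials. That 62.2 percent of 45 lands almost exactly on a whole number is consistent with the all-trials reading, though it does not prove it.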

T2 performed the session in this study on his fourth day of interacting with the arm; the prior three sessions were focused on system development. Using his eyes to indicate each letter, he later described his control of the arm: “I just imagined moving my own arm and the [DEKA] arm moved where I wanted it to go.”

The study used two advanced robotic arms: the DLR Light-Weight Robot III with DLR five-fingered hand and the DEKA Arm System. The DLR LWR-III, which is designed to assist in recreating actions like the human arm and hand and to interact with human users, could be valuable as an assistive robotic device for people with various disabilities. Patrick van der Smagt, head of bionics and assistive robotics at DLR, director of biomimetic robotics and machine learning labs at DLR and the Technische Universität München, and a co-senior author on the paper, said: “This is what we were hoping for with this arm. We wanted to create an arm that could be used intuitively by varying forms of control. The arm is already in use by numerous research labs around the world who use its unique interaction and safety capabilities. This is a compelling demonstration of the potential utility of the arm by a person with paralysis.”

DEKA Research and Development developed the DEKA Arm System for amputees, through funding from the United States Defense Advanced Research Projects Agency (DARPA). Dean Kamen, founder of DEKA, said, “One of our dreams for the Luke Arm [as the DEKA Arm System is known informally] since its inception has been to provide a limb that could be operated not only by external sensors, but also by more directly thought-driven control. We’re pleased about these results and for the continued research being done by the group at the VA, Brown and MGH.” The research is aimed at learning how the DEKA arm might be controlled directly from the brain, potentially allowing amputees to more naturally control this prosthetic limb.

Over the last two years, VA has been conducting an optimization study of the DEKA prosthetic arm at several sites, with the cooperation of veterans and active duty service members who have lost an arm. Feedback from the study is helping DEKA engineers to refine the artificial arm’s design and function. “Brain-computer interfaces, such as BrainGate, have the potential to provide an unprecedented level of functional control over prosthetic arms of the future,” said Joel Kupersmith, M.D., VA chief research and development officer. “This innovation is an example of federal collaboration at its finest.”

Story Landis, director of the National Institute of Neurological Disorders and Stroke, which funded the work in part, noted: “This technology was made possible by decades of investment and research into how the brain controls movement. It’s been thrilling to see the technology evolve from studies of basic neurophysiology and move into clinical trials, where it is showing significant promise for people with brain injuries and disorders.”

In addition to Hochberg, Donoghue, and van der Smagt, other authors on the paper are Daniel Bacher, Beata Jarosiewicz, Nicolas Masse, John Simeral, Joern Vogel, Sami Haddadin, Jie Liu, and Sydney Cash.

Additional comments

Vincent Ng

Medical Center Director, Providence VA Medical Center

“The VA is on the forefront of translational research that’s improving the quality of life for our Veterans who have sacrificed so much for our Nation. We are proud to be a part of this exciting, collaborative research.”

U.S. Sen. Sheldon Whitehouse

“I congratulate Brown University and the Providence VA Medical Center for this ground-breaking project, which could help to significantly improve the quality of life of disabled and paralyzed Americans, including many veterans. The innovations produced in this new study highlight the value of federal support for basic scientific research.”

U.S. Rep. David Cicilline

“I congratulate the entire Brown University community on the progress it has made in this project. It is my hope that with continued success, this advancement will help improve the quality of life for individuals with disabilities, especially our men and women in uniform.”

Jennifer French

Executive Director, Neurotech Network

“This latest development in cortical control research has the potential to revolutionize the way we interact with technology. More specifically, the possibilities open a new level of independence for those living with severe paralysis. Simple tasks like drinking, eating or brushing your teeth are not possible for people living with severe paralysis. The ability to perform these every-day tasks can create a new world of independence for people with severe disabilities.”

R. John Davenport

Associate Director, Brown University Institute for Brain Science

“This exciting advance from the BrainGate team exemplifies the amazing science that can only result when researchers from disparate disciplines collaborate. The Institute works to link fundamental science, engineering, and medicine among our more than 100 faculty members.”

The BrainGate2 study continues to enroll participants to take part in this research and recently added Stanford University as a member of the collaboration and a clinical trial site.

About the BrainGate collaboration

This advance is the result of the ongoing collaborative BrainGate research at Brown University, Massachusetts General Hospital, and Providence VA Medical Center; researchers at Stanford University have recently joined the collaboration as well. The BrainGate research team is focused on developing and testing neuroscientifically inspired technologies to improve the communication, mobility, and independence of people with neurologic disorders, injury, or limb loss.

Funding for the study and its projects comes from the Rehabilitation Research and Development Service, Office of Research and Development, U.S. Department of Veterans Affairs, the National Institutes of Health (some grants were funded all or in part through the American Recovery and Reinvestment Act), the Eunice Kennedy Shriver National Institute of Child Health and Human Development/National Center for Medical Rehabilitation Research (HD53403, HD100018, HD063931), the National Institute on Deafness and Other Communication Disorders, the National Institute of Neurological Disorders and Stroke (NS025074), the National Institute of Biomedical Imaging and Bioengineering (EB007401), the Doris Duke Charitable Foundation, the MGH-Deane Institute for Integrated Research on Atrial Fibrillation and Stroke, Katie Samson Foundation, and the Craig H. Neilsen Foundation. The contents do not represent the official views of the Department of Veterans Affairs or the United States Government.

The implanted microelectrode array and associated neural recording hardware used in the BrainGate research are manufactured by BlackRock Microsystems LLC (Salt Lake City, Utah). The research prototype Gen2 DEKAarm was provided by DEKA Integrated Solutions Inc, under contract from the Defense Advanced Research Projects Agency (DARPA).

The BrainGate pilot clinical trial was previously directed by Cyberkinetics Neurotechnology Systems Inc. (CKI) of Foxborough, Mass. CKI ceased operations in 2009, before the collection of data reported in the Nature manuscript. The clinical trials of the BrainGate2 Neural Interface System are now administered by Massachusetts General Hospital, Boston, Mass. Donoghue is a former chief scientific officer and a former director of CKI; he held stock and received compensation. Hochberg received research support from Massachusetts General and Spaulding Rehabilitation Hospitals, which in turn received clinical trial support from Cyberkinetics.

CAUTION: Investigational Device. Limited by Federal Law to Investigational Use. The device is being studied under an IDE for the detection and transmission of neural signals from the cortex to externally powered communication systems, environmental control systems, and assistive devices by persons unable to use their hands due to physical impairment. The clinical trial is ongoing; results presented are thus preliminary. The safety and effectiveness of the device have not been established.

Press contacts

David Orenstein, Brown University, david_orenstein@brown.edu, 401-527-2525

Mark Ballesteros, U.S. Dept. of Veterans Affairs, Mark.Ballesteros@va.gov, 202-461-7559

Michael Morrison, Massachusetts General Hospital, mdmorrison@partners.org, 617-724-6425

PROVIDENCE, R.I. [Brown University] — On April 12, 2011, nearly 15 years after she became paralyzed and unable to speak, a woman controlled a robotic arm by thinking about moving her arm and hand to lift a bottle of coffee to her mouth and take a drink. That achievement is one of the advances in brain-computer interfaces, restorative neurotechnology, and assistive robot technology described in the May 17 edition of the journal Nature by the BrainGate2 collaboration of researchers at the Department of Veterans Affairs, Brown University, Massachusetts General Hospital, Harvard Medical School, and the German Aerospace Center (DLR).

A 58-year-old woman (“S3”) and a 66-year-old man (“T2”) participated in the study. They had each been paralyzed by a brainstem stroke years earlier which left them with no functional control of their limbs. In the research, the participants used neural activity to directly control two different robotic arms, one developed by the DLR Institute of Robotics and Mechatronics and the other by DEKA Research and Development Corp., to perform reaching and grasping tasks across a broad three-dimensional space. The BrainGate2 pilot clinical trial employs the investigational BrainGate system initially developed at Brown University, in which a baby aspirin-sized device with a grid of 96 tiny electrodes is implanted in the motor cortex — a part of the brain that is involved in voluntary movement. The electrodes are close enough to individual neurons to record the neural activity associated with intended movement. An external computer translates the pattern of impulses across a population of neurons into commands to operate assistive devices, such as the DLR and DEKA robot arms used in the study now reported in Nature.

BrainGate participants have previously demonstrated neurally based two-dimensional point-and-click control of a cursor on a computer screen and rudimentary control of simple robotic devices.

The study represents the first demonstration and the first peer-reviewed report of people with tetraplegia using brain signals to control a robotic arm in three-dimensional space to complete a task usually performed by their arm. Specifically, S3 and T2 controlled the arms to reach for and grasp foam targets that were placed in front of them using flexible supports. In addition, S3 used the DLR robot to pick up a bottle of coffee, bring it to her mouth, issue a command to tip it, drink through a straw, and return the bottle to the table. Her BrainGate-enabled, robotic-arm control during the drinking task required a combination of two-dimensional movements across a table top plus a “grasp” command to either grasp and lift or tilt the robotic hand.

“Our goal in this research is to develop technology that will restore independence and mobility for people with paralysis or limb loss,” said lead author Dr. Leigh Hochberg, a neuroengineer and critical care neurologist who holds appointments at the Department of Veterans Affairs, Brown University, Massachusetts General Hospital, and Harvard. He is the sponsor-investigator for the BrainGate2 pilot clinical trial. “We have much more work to do, but the encouraging progress of this research is demonstrated not only in the reach-and-grasp data, but even more so in S3’s smile when she served herself coffee of her own volition for the first time in almost 15 years.”

Partial funding for this work comes from the VA, which is committed to improving the lives of injured veterans. “VA is honored to have played a role in this exciting and promising area of research,” said VA Secretary Eric Shinseki. “Today’s announcement represents a great step forward toward improving the quality of life for veterans and others who have either lost limbs or are paralyzed.”

Hochberg adds that even after nearly 15 years, a part of the brain essentially “disconnected” from its original target by a brainstem stroke was still able to direct the complex, multidimensional movement of an external arm — in this case, a robotic limb. The researchers also noted that S3 was able to perform the tasks more than five years after the investigational BrainGate electrode array was implanted. This sets a new benchmark for how long implanted brain-computer interface electrodes have remained viable and provided useful command signals.

John Donoghue, the VA and Brown neuroscientist who pioneered BrainGate more than a decade ago and who is co-senior author of the study, said the paper shows how far the field of brain-computer interfaces has come since the first demonstrations of computer control with BrainGate.

“This paper reports an important advance by rigorously demonstrating in more than one participant that precise three-dimensional neural control of robot arms is not only possible, but also repeatable,” said Donoghue, who directs the Brown Institute for Brain Science. “We’ve moved significantly closer to returning everyday functions, like serving yourself a sip of coffee, usually performed effortlessly by the arm and hand, for people who are unable to move their own limbs. We are also encouraged to see useful control more than five years after implant of the BrainGate array in one of our participants. This work is a critical step toward realizing the long-term goal of creating a neurotechnology that will restore movement, control, and independence to people with paralysis or limb loss.”

In the research, the robots acted as a substitute for each participant’s paralyzed arm. The robotic arms responded to the participants’ intent to move as they imagined reaching for each foam target. The robot hand grasped the target when the participants imagined a hand squeeze. Because the diameter of the targets was more than half the width of the robot hand openings, the task required the participants to exert precise control. (Videos of these actions are available on the Nature website.)

In 158 trials over four days, S3 was able to touch the target within an allotted time in 48.8 percent of the cases using the DLR robotic arm and hand and 69.2 percent of the cases with the DEKA arm and hand, which has the wider grasp. In 45 trials using the DEKA arm, T2 touched the target 95.6 percent of the time. Of the successful touches, S3 grasped the target 43.6 percent of the time with the DLR arm and 66.7 percent of the time with the DEKA arm. T2’s grasp succeeded 62.2 percent of the time.

T2 performed the session in this study on his fourth day of interacting with the arm; the prior three sessions were focused on system development. Using his eyes to indicate each letter, he later described his control of the arm: “I just imagined moving my own arm and the [DEKA] arm moved where I wanted it to go.”

The study used two advanced robotic arms: the DLR Light-Weight Robot III with DLR five-fingered hand and the DEKA Arm System. The DLR LWR-III, which is designed to assist in recreating actions like the human arm and hand and to interact with human users, could be valuable as an assistive robotic device for people with various disabilities. Patrick van der Smagt, head of bionics and assistive robotics at DLR, director of biomimetic robotics and machine learning labs at DLR and the Technische Universität München, and a co-senior author on the paper said: “This is what we were hoping for with this arm. We wanted to create an arm that could be used intuitively by varying forms of control. The arm is already in use by numerous research labs around the world who use its unique interaction and safety capabilities. This is a compelling demonstration of the potential utility of the arm by a person with paralysis.”

DEKA Research and Development developed the DEKA Arm System for amputees, through funding from the United States Defense Advanced Research Projects Agency (DARPA). Dean Kamen, founder of DEKA said, “One of our dreams for the Luke Arm [as the DEKA Arm System is known informally] since its inception has been to provide a limb that could be operated not only by external sensors, but also by more directly thought-driven control. We’re pleased about these results and for the continued research being done by the group at the VA, Brown and MGH.” The research is aimed at learning how the DEKA arm might be controlled directly from the brain, potentially allowing amputees to more naturally control this prosthetic limb.

Over the last two years, VA has been conducting an optimization study of the DEKA prosthetic arm at several sites, with the cooperation of veterans and active duty service members who have lost an arm. Feedback from the study is helping DEKA engineers to refine the artificial arm’s design and function. “Brain-computer interfaces, such as BrainGate, have the potential to provide an unprecedented level of functional control over prosthetic arms of the future,” said Joel Kupersmith, M.D., VA chief research and development officer. “This innovation is an example of federal collaboration at its finest.”

Story Landis, director of the National Institute of Neurological Disorders and Stroke, which funded the work in part, noted: “This technology was made possible by decades of investment and research into how the brain controls movement. It’s been thrilling to see the technology evolve from studies of basic neurophysiology and move into clinical trials, where it is showing significant promise for people with brain injuries and disorders.”

In addition to Hochberg, Donoghue, and van der Smagt, other authors on the paper are Daniel Bacher, Beata Jarosiewicz, Nicolas Masse, John Simeral, Joern Vogel, Sami Haddadin, Jie Liu, and Sydney Cash.

Additional comments

Vincent NgMedical Center Director, Providence VA Medical Center

“The VA is on the forefront of translational research that’s improving the quality of life for our Veterans who have sacrificed so much for our Nation. We are proud to be a part of this exciting, collaborative research.”

U.S. Sen. Sheldon Whitehouse

“I congratulate Brown University and the Providence VA Medical Center for this ground-breaking project, which could help to significantly improve the quality of life of disabled and paralyzed Americans, including many veterans. The innovations produced in this new study highlight the value of federal support for basic scientific research.”

U.S. Rep. David Cicilline

“I congratulate the entire Brown University community on the progress it has made in this project. It is my hope that with continued success, this advancement will help improve the quality of life for individuals with disabilities, especially our men and women in uniform.”

Jennifer French

Executive Director, Neurotech Network

“This latest development in cortical control research has the potential to revolutionize the way we interact with technology. More specifically, the possibilities open a new level of independence for those living with severe paralysis. Simple tasks like drinking, eating or brushing your teeth are not possible for people living with severe paralysis. The ability to perform these every-day tasks can create a new world of independence for people with severe disabilities.”

R. John Davenport

Associate Director, Brown University Institute for Brain Science

“This exciting advance from the BrainGate team exemplifies the amazing science that can only result when researchers from disparate disciplines collaborate. The Institute works to link fundamental science, engineering, and medicine among our more than 100 faculty members.”

The BrainGate2 study continues to enroll participants to take part in this research and recently added Stanford University as a member of the collaboration and a clinical trial site.

About the BrainGate collaboration

This advance is the result of the ongoing collaborative BrainGate research at Brown University, Massachusetts General Hospital, Providence VA Medical Center; researchers at Stanford University have recently joined the collaboration as well. The BrainGate research team is focused on developing and testing neuroscientifically inspired technologies to improve the communication, mobility, and independence of people with neurologic disorders, injury, or limb loss.

Funding for the study and its projects comes from the Rehabilitation Research and Development Service, Office of Research and Development, U.S. Department of Veterans Affairs, the National Institutes of Health (some grants were funded all or in part through the American Recovery and Reinvestment Act), the Eunice Kennedy Shriver National Institute of Child Health and Human Development/National Center for Medical Rehabilitation Research (HD53403, HD100018, HD063931), the National Institute on Deafness and Other Communication Disorders, the National Institute of Neurological Disorders and Stroke (NS025074), the National Institute of Biomedical Imaging and Bioengineering (EB007401), the Doris Duke Charitable Foundation, the MGH-Deane Institute for Integrated Research on Atrial Fibrillation and Stroke, Katie Samson Foundation, and the Craig H. Neilsen Foundation. The contents do not represent the official views of the Department of Veterans Affairs or the United States Government.

The implanted microelectrode array and associated neural recording hardware used in the BrainGate research are manufactured by BlackRock Microsystems LLC (Salt Lake City, Utah). The research prototype Gen2 DEKAarm was provided by DEKA Integrated Solutions Inc., under contract from the Defense Advanced Research Projects Agency (DARPA).

The BrainGate pilot clinical trial was previously directed by Cyberkinetics Neurotechnology Systems Inc., Foxborough, Mass. (CKI). CKI ceased operations in 2009, before the collection of data reported in the Nature manuscript. The clinical trials of the BrainGate2 Neural Interface System are now administered by Massachusetts General Hospital, Boston, Mass. Donoghue is a former chief scientific officer and a former director of CKI; he held stocks and received compensation. Hochberg received research support from Massachusetts General and Spaulding Rehabilitation Hospitals, which in turn received clinical trial support from Cyberkinetics.

CAUTION: Investigational Device. Limited by Federal Law to Investigational Use. The device is being studied under an IDE for the detection and transmission of neural signals from the cortex to externally powered communication systems, environmental control systems, and assistive devices by persons unable to use their hands due to physical impairment. The clinical trial is ongoing; results presented are thus preliminary. The safety and effectiveness of the device have not been established.

Press contacts

David Orenstein, Brown University, david_orenstein@brown.edu, 401-527-2525

Mark Ballesteros, U.S. Dept. of Veterans Affairs, Mark.Ballesteros@va.gov, 202-461-7559.

Michael Morrison, Massachusetts General Hospital, mdmorrison@partners.org, 617-724-6425

Brian Reggiannini figures out who’s talking

If computers could become ‘smart’ enough to recognize who is talking, that could allow them to produce real-time transcripts of meetings, courtroom proceedings, debates, and other important events. In the dissertation that will allow him to receive his Ph.D. at Commencement this year, Brian Reggiannini found a way to advance the state of the art for voice- and speaker-recognition.

Very few of us, however, could ever get a computer to do anything like that. That’s why doing it well has earned Brian Reggiannini a Ph.D. at Brown and a career in the industry.

In his dissertation, Reggiannini managed to raise the bar for how well a computer connected to a roomful of microphones can keep track of who among a small group of speakers is talking. Further refined and combined with speech recognition, such a system could lead to instantaneous transcriptions of meetings, courtroom proceedings, or debates among, say, several rude political candidates who are prone to interrupt. It could help the deaf follow conversations in real-time.

If only it weren’t so hard to do.

Brian Reggiannini

“We’re trying to teach a computer how to do something that we as humans do so naturally that we don’t even understand how we do it.”

But Reggiannini, who came to Brown as an undergraduate in 2003 and began building microphone arrays in the lab of Harvey Silverman, professor of engineering, in his junior year, was determined to advance the state of the art.

The specific challenge he set for himself was real-time tracking of who’s talking among at least a few people who are free to rove around a room. Hardware was not the issue. The test room on campus has 448 microphones all around the walls and he only used 96. That was enough to gather the kind of information that allows systems – think of your two ears – to locate the source of a sound.
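The "two ears" idea can be illustrated with a minimal sketch: a sound reaches two microphones at slightly different times, and the delay that best lines up the two recordings hints at where the source is. The code below is only an illustration under stated assumptions, not the method used in the dissertation. The function name `estimate_delay`, the synthetic click, the 16 kHz sample rate, and the 343 m/s speed of sound are all assumptions introduced here.

```python
import math

def estimate_delay(sig_a, sig_b, max_lag):
    """Return the lag (in samples) at which sig_b best lines up with sig_a.

    A positive lag means the sound reached microphone B later than microphone A.
    Uses a brute-force cross-correlation over lags in [-max_lag, max_lag].
    """
    best_lag, best_score = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, a in enumerate(sig_a):
            j = i + lag
            if 0 <= j < len(sig_b):
                score += a * sig_b[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

# Synthetic example: the same short click arrives 3 samples later at microphone B.
pulse = [0.0, 1.0, 0.5, -0.5, -1.0, 0.0]
mic_a = [0.0] * 10 + pulse + [0.0] * 14
mic_b = [0.0] * 13 + pulse + [0.0] * 11

SAMPLE_RATE_HZ = 16000      # assumed sample rate, not from the article
SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in room-temperature air

lag = estimate_delay(mic_a, mic_b, max_lag=8)
path_difference_m = lag / SAMPLE_RATE_HZ * SPEED_OF_SOUND_M_S
print(lag, round(path_difference_m, 4))
```

One delay only says how much farther the source is from one microphone than the other. Combining delays from many microphone pairs is what lets an array narrow the source down to a position in the room.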

The real rub was in devising the algorithms and, more abstractly, in realizing where his reasoning about the problem had to abandon the conventional wisdom.

Previous engineers who had tried something like this were on the right track. After all, there is only so much data available in situations like this. Some tried analyzing accents, pronunciation, word use, and cadence, but those are complex to track and require a lot of data. The simpler features are pitch, volume, and spectral statistics (a breakdown of a voice’s component waves and frequencies) of each speaker’s voice. Systems can also ascertain where a voice came from within the room.

Snippets, not speakers

But many attempts to build speaker identification systems (like the voice recognition in your personal computer) have relied on the idea that a computer could be extensively trained in “clean,” quiet conditions to learn a speaker’s voice in advance.

One of Reggiannini’s key insights was that just like a politician couldn’t possibly be primed to recognize every voter at a rally, it’s unrealistic to train a speaker-recognition system with the voice of everyone who could conceivably walk into a room.

Instead, Reggiannini sought to build a system that could learn to distinguish the voices of anyone within a session. It analyzes each new segment of speech and also notes the distinct physical position of individuals within the room. The system compares each new segment, or snippet, of what it hears to previous snippets. It then determines a statistical likelihood that the new snippet would have come from a speaker it has already identified as unique.

“Instead of modeling talkers, I’m going to instead model pairs of speech segments,” Reggiannini recalled.
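The pairwise idea can be sketched in a few lines. The example below is a loose illustration, not Reggiannini's actual statistical model: it stands in a simple distance-based score for the likelihood he describes, and the feature names (`pitch`, `pos`), the scale parameters, and the 0.5 threshold are all assumptions made for the example.

```python
import math

def same_talker_score(a, b, pitch_scale=20.0, pos_scale=0.5):
    """Score in (0, 1]: 1.0 for identical snippets, near 0 for very different ones.

    Each snippet is a dict with a mean pitch in Hz and an (x, y) position in meters.
    """
    dp = (a["pitch"] - b["pitch"]) / pitch_scale
    dx = math.dist(a["pos"], b["pos"]) / pos_scale
    return math.exp(-0.5 * (dp * dp + dx * dx))

def label_snippets(snippets, threshold=0.5):
    """Give each snippet the label of its best-matching earlier snippet.

    If no earlier snippet scores above the threshold, the snippet is treated
    as coming from a talker the system has not heard before in this session.
    """
    labels = []
    for i, snip in enumerate(snippets):
        best_label, best_score = None, threshold
        for j in range(i):
            score = same_talker_score(snip, snippets[j])
            if score > best_score:
                best_label, best_score = labels[j], score
        if best_label is None:
            best_label = max(labels, default=-1) + 1  # new talker
        labels.append(best_label)
    return labels

# Two made-up talkers alternating, with no training on either voice in advance.
snippets = [
    {"pitch": 110.0, "pos": (1.0, 1.0)},
    {"pitch": 210.0, "pos": (4.0, 2.0)},
    {"pitch": 112.0, "pos": (1.1, 1.0)},
    {"pitch": 208.0, "pos": (4.0, 2.1)},
]
labels = label_snippets(snippets)
print(labels)
```

Because the score is defined on pairs of snippets rather than on enrolled voices, nothing in the sketch needs to know who might walk into the room.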

A key characteristic of Reggiannini’s system is that it can work with very short snippets of speech. It doesn’t need full sentences to work at least somewhat well. That’s important because it’s realistic. People don’t speak in florid monologues. They speak in fractured conversations. No way! Yes, really.

People also are known to move around. For that reason, position, as inferred by the array of microphones, can be only an intermittent asset. At any single moment in time, especially at the beginning of a session, position helpfully distinguishes each talker from every other (no two people can be in the same place at the same time), but when people stop talking and start walking, the system necessarily loses track of them until they speak again.

Reggiannini tested his system every step of the way. His experiments included just pitch analysis, just spectral analysis, a combination of the two, position alone, and a combination of the full speech analysis and position tracking. He subjected the system to a multitude of voices, sometimes male-only, sometimes female-only, and sometimes mixed. In every case, at least until the speech snippets became quite long, his system was better able to discriminate among talkers than two other standard approaches.

That said, the system is sometimes uncertain, and in such cases it defers assigning speech to a talker until it is more certain. Once it is, it goes back and labels the snippets accordingly.
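Deferring a decision and labeling after the fact can be sketched as follows. This is a hypothetical illustration rather than the dissertation's procedure. It assumes only two candidate talkers, "A" and "B", a per-snippet evidence value whose sign says which talker is favored, and that snippets held back together come from the same talker. The function name and the threshold of 2.0 are also assumptions.

```python
def label_with_deferral(log_ratios, threshold=2.0):
    """Label snippets 'A' or 'B', holding back until the evidence is decisive.

    log_ratios[i] > 0 favors talker A for snippet i; a value < 0 favors talker B.
    Returns one label per snippet; None marks snippets still undecided at the end.
    """
    labels = [None] * len(log_ratios)
    pending = []    # indices of snippets waiting for a decision
    evidence = 0.0  # running sum of evidence over the pending snippets
    for i, ratio in enumerate(log_ratios):
        pending.append(i)
        evidence += ratio
        if abs(evidence) >= threshold:
            winner = "A" if evidence > 0 else "B"
            for k in pending:  # go back and label everything that was deferred
                labels[k] = winner
            pending, evidence = [], 0.0
    return labels

# The first two snippets are too ambiguous alone; the third tips the balance.
labels = label_with_deferral([0.4, 0.9, 1.1, -2.5, -0.3])
print(labels)
```

The last snippet stays unlabeled because the session ends before enough evidence accumulates, which mirrors a system that would rather wait than guess.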

It’s no surprise that the system would err, or hedge, here and there. Reggiannini’s test room was noisy. While some systems are fed very clean audio, the only major concessions that Reggiannini allowed himself were that speakers wouldn’t run or jump across the room and that only one would speak from the script at a time. The ability to filter individual voices out from within overlapping speech is perhaps the biggest remaining barrier between the system remaining a research project and becoming a commercial success.

A career in the field

While the ultimate fate of Reggiannini’s innovations is not yet clear, what is certain is that he has been able to embark on a career in the field he loves. Since leaving Brown last summer he’s been working as a digital signal processing engineer at Analog Devices in Norwood, Mass., which happens to be his hometown.

Reggiannini has yet to work on an audio project, but that’s fine with him. His interest is the signal processing, not sound per se. Instead he’s applied his expertise to challenges of heart monitoring and wireless communications.

“I’ve been jumping around applications but all the fundamental signal processing theory applies no matter what the signal is,” he said. “My background lets me work on a wide range of problems.”

After seven years and three degrees at Brown, Reggiannini was prepared to pursue his passion.

- by David Orenstein

Brown Team Wins Rhode Island Business Plan Competition For Fourth Straight Year

For the fourth consecutive year, a Brown student team has won the Rhode Island Business Plan Competition. This year, Overhead.fm, led by Stephen Hebson ’12, an economics and history double concentrator, and Parker Wells ’12, a mechanical engineering concentrator, won the student track of the competition. Their plan is to produce a web app to provide a customizable stream of music for in-store use, licensing music directly from the artists and labels. They received $15,000 in cash and services valued at $24,000 for winning the competition. Winners were announced at the annual RIBX business expo at the Rhode Island Convention Center. In order to be eligible to win, applicants had to agree to establish or maintain operations in Rhode Island.

Previous Brown winners of the competition have included PriWater, now Premama (2011), Speramus (2010), and Runa (2009). Premama (http://drinkpremama.com/) produces a prenatal beverage supplement to help reduce birth defects. Speramus (www.speramus.com) is an online fundraising platform that matches donors with individual support opportunities. Runa (www.runa.org) produces energy drinks made from the leaves of an Amazonian tree; it has been featured in the New York Times, has raised over $1 million from investors, and has expanded distribution into Whole Foods.

Two other Brown teams, both with connections to the School of Engineering, were also named finalists at the 2012 competition. Finalists receive $5,000 in cash and services valued at between $9,000 and $11,500.

One of the finalists, JCD Wind, came out of Steve Petteruti’s Entrepreneurship I and Entrepreneurship II classes, Engineering 1930G and Engineering 1930H. JCD Wind included James McGinn ’12, a biomedical engineering concentrator, and Carli Wiesenfeld ’12, a commerce, organizations, and entrepreneurship (COE) concentrator. The company aims to make seamless, high-strength, lightweight carbon fiber turbine blades. McGinn is also a member of the men’s rugby team, and Wiesenfeld is a member of the women’s gymnastics team.

The other finalist was a group of four graduate students in the Program in Innovation Management and Entrepreneurship (PRIME) master’s degree program. Solar4Cents included PRIME students Sean Pennino, Bhavuk Nagpal, Xiaotong 'Peter' Shan, and Meng 'Milo' Zhang. Solar4Cents is a manufacturing company that aims to produce low-cost, thin-film copper, zinc, tin, and sulfur solar cells for solar panel manufacturers.

Established in 2000, the Rhode Island Business Plan Competition has awarded more than $1 million in prizes since its inception.

For the official RI Business plan release on the competition, please go to:

http://www.ri-bizplan.com/tabid/259/Default.aspx
